This section documents best practices on how to deploy Drupal WxT to your chosen environment.

1 - Containers

For the (optional) container-based development workflow, these are roughly the steps to follow.

Clone the docker-scaffold repository:

git clone https://github.com/drupalwxt/docker-scaffold.git docker

Note: The docker folder should be added to your .gitignore file.
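The note above can be applied from the command line. The following is a minimal sketch, assuming the scaffold was cloned into `docker` as shown; `ignore_once` is a hypothetical helper name and not part of docker-scaffold.

```shell
# Append an entry to a .gitignore file only if it is not already listed.
# ignore_once is a hypothetical helper, not part of docker-scaffold.
ignore_once() {
  entry="$1"
  file="${2:-.gitignore}"
  # Make sure the file exists so grep does not fail on a fresh repository.
  touch "$file"
  # -x matches whole lines only, -F treats the entry as a literal string.
  grep -qxF -- "$entry" "$file" || printf '%s\n' "$entry" >> "$file"
}
```

Calling `ignore_once docker/` from the project root adds the entry once; repeated calls leave the file unchanged.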

Linux Environments

The following are the steps you should follow for a Linux-based environment.

Create the necessary symlinks:

ln -s docker/docker-compose.base.yml docker-compose.base.yml

ln -s docker/docker-compose.ci.yml docker-compose.ci.yml

ln -sf docker/docker-compose.yml docker-compose.yml
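If the links need to be recreated often, the three commands above could be wrapped in a small function. This is only a sketch: `link_compose_files` is a hypothetical helper, and it assumes the scaffold lives in `./docker` as cloned earlier.

```shell
# Recreate the compose symlinks, failing early if the scaffold is missing.
# link_compose_files is a hypothetical helper; the file names come from the steps above.
link_compose_files() {
  for f in docker-compose.base.yml docker-compose.ci.yml docker-compose.yml; do
    if [ -e "docker/$f" ]; then
      # -f replaces an existing link so the function can be run repeatedly.
      ln -sf "docker/$f" "$f"
    else
      echo "missing docker/$f (was docker-scaffold cloned into ./docker?)" >&2
      return 1
    fi
  done
}
```

Run `link_compose_files` from the project root; a non-zero exit status means at least one target file was not found.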

Create and adjust the following Makefile:

include .env

NAME := $(or $(BASE_IMAGE),$(BASE_IMAGE),drupalwxt/site-wxt)

VERSION := $(or $(VERSION),$(VERSION),'latest')

PLATFORM := $(shell uname -s)

$(eval GIT_USERNAME := $(if $(GIT_USERNAME),$(GIT_USERNAME),gitlab-ci-token))

$(eval GIT_PASSWORD := $(if $(GIT_PASSWORD),$(GIT_PASSWORD),$(CI_JOB_TOKEN)))

DOCKER_REPO := https://github.com/drupalwxt/docker-scaffold.git

GET_DOCKER := $(shell [ -d docker ] || git clone $(DOCKER_REPO) docker)

include docker/Makefile
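The two `$(or ...)` lines in the Makefile fall back to a default when the variable read from `.env` is empty or unset. A rough shell analogue is sketched below for illustration only: `resolve_image` is a hypothetical helper, and joining the two values as `name:version` is an assumption, since how `docker/Makefile` actually uses `NAME` and `VERSION` is not shown here.

```shell
# Shell analogue of the Makefile's $(or ...) fallbacks, for illustration only.
# resolve_image is a hypothetical helper; the name:version format is an assumption.
resolve_image() {
  # ${VAR:-default} uses the default when VAR is unset or empty,
  # which mirrors how $(or ...) skips empty values in make.
  name="${BASE_IMAGE:-drupalwxt/site-wxt}"
  version="${VERSION:-latest}"
  printf '%s:%s\n' "$name" "$version"
}
```

With neither variable set, `resolve_image` prints `drupalwxt/site-wxt:latest`; setting `BASE_IMAGE` or `VERSION` in the environment overrides the respective part.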

Build and setup your environment with default content:

# Composer install

export COMPOSER_MEMORY_LIMIT=-1 && composer install

# Make our base docker image

make build

# Bring up the dev stack

docker compose -f docker-compose.yml build --no-cache

docker compose -f docker-compose.yml up -d

# Install Drupal

make drupal_install

# Development configuration

./docker/bin/drush config-set system.performance js.preprocess 0 -y && \

./docker/bin/drush config-set system.performance css.preprocess 0 -y && \

./docker/bin/drush php-eval 'node_access_rebuild();' && \

./docker/bin/drush config-set wxt_library.settings wxt.theme theme-gcweb -y && \

./docker/bin/drush cr

# Migrate default content

./docker/bin/drush migrate:import --group wxt --tag 'Core' && \

./docker/bin/drush migrate:import --group gcweb --tag 'Core' && \

./docker/bin/drush migrate:import --group gcweb --tag 'Menu'
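The commands above assume that git, composer, make, and docker are available on the PATH. A small pre-flight check, sketched here with a hypothetical helper named `missing_tools`, can report anything that is absent before the build starts.

```shell
# Print each command named in the arguments that cannot be found on PATH.
# missing_tools is a hypothetical helper; the tool list in the usage note
# is inferred from the commands used in this section.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
  done
}
```

For example, `missing_tools git composer make docker` prints nothing when every tool is installed and otherwise lists the missing ones, one per line.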

Modern OSX Environments

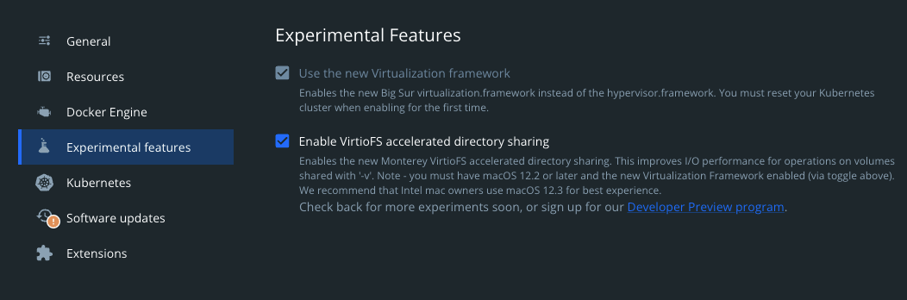

If you have Docker for Desktop and a new enough OSX environment (Monterey or higher) then the steps are the exact same as those for the Linux environment given above.

All that is required in advance is to enable VirtioFS accelerated directory sharing which you can see in the attached picture below.

Docker for Desktop VirtioFS

Image: Drupal / CC-BY-CA

For older environments you may still use mutagen which is discussed below.

Legacy OSX Environments (Mutagen)

Although the new virtualization framework discussed above removes the need for it, older environments must use Mutagen instead, which requires a few additional steps.

# Mutagen Setup

export VOLUME=site-wxt-mutagen-cache

docker volume create $VOLUME

docker container create --name $VOLUME -v $VOLUME:/volumes/$VOLUME mutagenio/sidecar:0.13.0-beta3

docker start $VOLUME

mutagen sync create --name $VOLUME --sync-mode=two-way-resolved --default-file-mode-beta 0666 --default-directory-mode-beta 0777 $(pwd) docker://$VOLUME/volumes/$VOLUME

# Create symlinks

ln -s docker/docker-compose.mutagen.yml docker-compose.mutagen.yml

# Composer install

export COMPOSER_MEMORY_LIMIT=-1 && composer install

# Make our base docker image

make build

# Bring up the dev stack

docker compose -f docker-compose.mutagen.yml build --no-cache

docker compose -f docker-compose.mutagen.yml up -d

# Install Drupal

make drupal_install

# Development configuration

./docker/bin/drush config-set system.performance js.preprocess 0 -y && \

./docker/bin/drush config-set system.performance css.preprocess 0 -y && \

./docker/bin/drush php-eval 'node_access_rebuild();' && \

./docker/bin/drush config-set wxt_library.settings wxt.theme theme-gcweb -y && \

./docker/bin/drush cr

# Migrate default content

./docker/bin/drush migrate:import --group wxt --tag 'Core' && \

./docker/bin/drush migrate:import --group gcweb --tag 'Core' && \

./docker/bin/drush migrate:import --group gcweb --tag 'Menu'

Cleanup

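If you wish to have a pristine Docker environment, you may execute the following commands. Note that they remove all containers and images on the host, not only those belonging to this project.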

docker rm $(docker ps -a -q) --force

docker rmi $(docker images -q) --force

docker volume prune -f

For those still using Mutagen you may also need to execute the following command:

mutagen sync terminate <sync_xxxxx>
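The Docker commands above act on the whole Docker host. Where only this project's stack should be removed, a narrower teardown through Docker Compose may be enough. The sketch below assumes the compose file names used earlier; `compose_teardown` and the `DRY_RUN` switch are hypothetical, and images built outside Compose (such as the base image from `make build`) would not be touched.

```shell
# Tear down one compose project: containers, networks, volumes, and locally built images.
# compose_teardown and DRY_RUN are hypothetical names used for illustration.
compose_teardown() {
  compose_file="${1:-docker-compose.yml}"
  # Build the command as positional parameters so it can be printed or executed.
  set -- docker compose -f "$compose_file" down --volumes --rmi local
  if [ "${DRY_RUN:-0}" = "1" ]; then
    # Only show what would be run.
    echo "$*"
  else
    "$@"
  fi
}
```

Setting `DRY_RUN=1` before calling `compose_teardown docker-compose.mutagen.yml` prints the command without running it, which is a cheap way to review it first.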

2 - Kubernetes

Introduction

This document represents a high-level technical overview of how the Helm Chart for Drupal WxT was built and how we envision Drupal itself should be architected in the cloud to support any of the Government of Canada procured cloud service providers (AWS, Azure, and GCP). It should be noted that this Helm chart would also work in an on-premise environment with the appropriate Kubernetes infrastructure.

A key mandate when creating this architecture was to follow the Open Source Directive as given by the Treasury Board Secretariat (C.2.3.8), which states that you should try to use open standards and open source software first. Additionally, where possible all functionality should be exposed as RESTful services and leverage microservices via a containerized approach (C.2.3.10).

We are leveraging a microservices design pattern utilizing immutable and scanned images through containerization running on Kubernetes, with a platform that has been built and open sourced by Statistics Canada. While the platform will be discussed briefly to provide context, the bulk of the document discusses how Drupal is installed and configured on top of it.

Kubernetes

Kubernetes orchestrates the computing, networking, and storage infrastructure on behalf of user workloads. It assigns workloads and resources to a series of nearly identically-configured virtual machines.

Kubernetes supports workloads running anywhere, from IoT devices to private cloud and all the way to public cloud. This is possible due to Kubernetes’ pluggable architecture, which defines interfaces that are then implemented for the different environments. Kubernetes provides an Infrastructure as Code environment defined through declarative configuration. Because Kubernetes abstracts away the implementation of the computing environment and of application dependencies such as storage and networking, applications do not have to concern themselves with these differences.

Kubernetes is backed by a large (10,000+), vibrant, and growing community consisting of end users, businesses, vendors, and large cloud providers.

Key Points

This architecture brings many benefits to the Government of Canada:

- Support for hybrid workloads (Linux and Windows), deployed using the same methodology

- Abstraction of underlying hardware (“cattle rather than pets”) enabling an automated, highly available, and scalable infrastructure for microservices

- Declarative configuration enabling Infrastructure as Code allowing for deployment automation, reproducibility and re-use

- Constructs to support advanced deployment patterns (blue/green, canary, etc.) enabling zero-downtime deployments

- Platform-level tooling for traffic handling (routing, error recovery, encryption, etc.), monitoring, observability and logging, and secrets management

Kubernetes is supported across all cloud service providers (fully managed and self-managed), preventing vendor lock-in. Managed offerings are available from Google, IBM, Azure, Digital Ocean, Amazon, Oracle, and more. The choice of whether to roll your own, use a managed service (AKS, EKS, GKE), or use a Platform as a Service (OpenShift, Pivotal) is up to the organization to decide based on its requirements and risks. Our preference is to stay as close as possible to the open source version of Kubernetes and its tooling in order to remain compatible with the different Kubernetes offerings (raw, managed, platform, etc.).

Government

Kubernetes is being actively investigated and/or used by many departments across the Government of Canada. Departments are starting to collaborate more and work together towards a common, well-vetted solution, which is why we have open sourced our platform on the GC Accelerators, hoping to foster this collaboration and form a community of practice.

Provided below is the Terraform (Infrastructure as Code) necessary to install the Azure Kubernetes Service infrastructure as well as configure it with optional platform components (RBAC, Service Mesh, Policies, etc.).

Drupal WxT on Kubernetes

A managed Drupal Platform as a Service is a strong candidate to take advantage of what a Kubernetes platform offers. The design enables a quick onboarding of new workloads through the repeatable deployment methodology provided by Kubernetes.

Kubernetes

Recommendation: Kubernetes

Kubernetes is the basis of the Drupal platform and was further discussed above.

The whole Drupal application stack can be easily installed in a distributed fashion in minutes using our Helm chart. The chart facilitates a managed service workflow (rolling updates, cronjobs, health checks, auto-scaling, etc.) without user intervention.

Ingress controller

Recommendation: Istio

The ingress controller is responsible for accepting external HTTPS connections and routing them to backend applications based on configuration defined in Kubernetes Ingress objects. Routing can be done by domain and/or path.

Varnish

Recommendation: Varnish

Varnish is a highly customizable reverse proxy cache. This will aid in supporting a large number of concurrent visitors as the final rendered pages can be served from cache. Varnish is only required on the public environment and is not used in the content staging environment.

Nginx can technically address some of the caching requirements; however, the open source version does not support purging selective pages. We need to clear caches whenever content is updated or saved, which Varnish supports quite readily along with the Expire Drupal module.

Nginx

Recommendation: Nginx

Nginx is an open source web server that can also be used as a reverse proxy, HTTP cache, and load balancer. Due to its roots in performance optimization at scale, Nginx often outperforms similarly popular web servers and is built to offer low memory usage and high concurrency.

Web (PHP-FPM)

Recommendation: PHP-FPM

Drupal runs in the PHP runtime environment. PHP-FPM is the process manager organized as a master process managing pools of individual worker processes. Its architecture shares design similarities with event-driven web servers such as Nginx and allows for PHP scripts to use as much of the server's available resources as necessary without additional overhead that comes from running them inside of web server processes.

The PHP-FPM master process dynamically creates and terminates worker processes (within configurable limits) as traffic to PHP scripts increases and decreases. Processing scripts in this way allows for much higher processing performance, improved security, and better stability. The primary performance benefits from using PHP-FPM are more efficient PHP handling and ability to use opcode caching.
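The "configurable limits" mentioned above are typically capped by PHP-FPM's `pm.max_children` directive. A common rule of thumb, not something prescribed by the Helm chart, sizes it as the memory available to PHP divided by the average memory used by one worker. A minimal sketch follows, with a hypothetical helper name and hypothetical numbers:

```shell
# Rule-of-thumb estimate for pm.max_children:
# floor(memory available to PHP / average memory per worker), both in MB.
# fpm_max_children is a hypothetical helper, not part of PHP-FPM or the Helm chart.
fpm_max_children() {
  available_mb="$1"
  per_worker_mb="$2"
  # Shell arithmetic performs integer division, which rounds down.
  echo $(( available_mb / per_worker_mb ))
}
```

For instance, `fpm_max_children 2048 64` prints `32`. Real worker memory varies by site, so the inputs should come from measurements on the running container rather than from these example values.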

Redis

Recommendation: Redis

Redis is an advanced key-value cache and store.

It is often referred to as a data structure server since keys can contain strings, hashes, lists, sets, sorted sets, bitmaps, etc.

Redis is particularly useful when using cloud managed databases to limit the overall database load and to make performance more consistent.

Database

Recommendation: MySQL or PostgreSQL

Drupal maintains its state in a database, and while it supports several types, only MySQL or PostgreSQL should be considered. We highly recommend PostgreSQL based on our experience building and launching quite a few Drupal sites in the cloud with it. However, both run quite well with minimal operational concerns. Additionally, the Helm chart supports connection pooling using either ProxySQL or PgBouncer, depending on the database used.

Note: Our recommendation would be to use a managed database offering from the cloud providers for a production environment. Coupled with a managed file service, this removes all stateful components from the cluster enabling the best application experience possible.

Stateful Assets

Drupal stores generated CSS/JS assets and uploaded content (images, videos, etc.) in file storage. As the architecture is designed to be distributed, this presents some design considerations for us.

Azure Files (CIFS / NFS)

Azure Files provides fully managed file shares in the cloud that are accessible via the Server Message Block (SMB) or NFS protocol. Support is provided for dynamically creating and using a persistent volume with Azure Files in the Azure Kubernetes Service.

For more information on Azure Files, please see Azure Files and AKS.

Note: This is currently our recommended choice as it results in a simpler installation in Azure than relying on an S3 compatible object store discussed below. Similar storage solutions exist with the other cloud providers.

3 - Azure App Service

This page provides an overview of the process of creating a monolith container to deploy to Azure App Service (appsvc). It assumes you already have your project set up to work with the docker-scaffold repository. For initial project setup using docker-scaffold, see the beginning of the container-based development workflow in Local Docker setup.

Build the appsvc image

# Make our base docker image

make build

# Build the appsvc image

docker compose -f docker-compose.appsvc.yml up -d

Note: After making changes to the project, you will need to remove your base image and build it again. This will ensure all changed files are copied into the base image as needed.

Delete all Docker images

docker rmi $(docker images -q) --force

Tag appsvc image and push to Azure Container Registry (ACR)

Now that you have built your appsvc image, you need to tag it and push it to the ACR in order to deploy it to App Service.

docker login MY-CONTAINER-REGISTRY.azurecr.io

docker tag site-XYZ-appsvc:latest MY-CONTAINER-REGISTRY.azurecr.io/site-XYZ-appsvc:[tag]

docker push MY-CONTAINER-REGISTRY.azurecr.io/site-XYZ-appsvc:[tag]

Once this is done, you should be able to see your new image in the ACR.
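To avoid retyping the registry path in the tag and push steps, the image reference can be assembled once and reused. This is a sketch only: `acr_image_ref` is a hypothetical helper, and the registry and image names are the same placeholders used above.

```shell
# Assemble <registry>.azurecr.io/<image>:<tag> from its three parts.
# acr_image_ref is a hypothetical helper, not part of docker-scaffold or the Azure CLI.
acr_image_ref() {
  registry="$1"
  image="$2"
  tag="$3"
  printf '%s.azurecr.io/%s:%s\n' "$registry" "$image" "$tag"
}

# Example use (commented out because it needs Docker and a real registry):
#   ref=$(acr_image_ref MY-CONTAINER-REGISTRY site-XYZ-appsvc "$(git rev-parse --short HEAD)")
#   docker tag site-XYZ-appsvc:latest "$ref" && docker push "$ref"
```

Using the short Git commit hash as the tag is one possible convention; any tag scheme that lets you trace an image back to its source would serve the same purpose.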

Build pipeline

In order to automate the build process using Azure DevOps, you can create a pipeline file in the root of your Drupal repo (see Example pipeline file).

This pipeline script will build the appsvc image and push it to your container registry. Make sure you have created the required Service Connections in Azure DevOps (Git repository, ACR).

Notes

- By default, the appsvc image comes with Varnish and Redis. This can cause issues if your App Service environment is set to Scale Out, because Varnish and Redis store cached data in memory, which cannot be mapped to a storage account or another shared resource. As a result, instances can hold different data, and users may see content flip between old and new versions when they refresh their browser. It is recommended to stay with one instance when using the appsvc container as it comes configured.